Today I implemented the data format, and calibrated the monitor (that is, three hundred individual measurements, by hand, the old-school way). I also spent some time looking at adaptive boundary techniques.

The monitor is today’s weird one: normally, the triode gamma value should be around 2.5 for a CRT (1.1 for an LCD). This old fellow does 2.94. And not just that, it barely reaches 70% of its original brightness spec.

If I remember right, the cathode ‘vanishes’ with age, and therefore the distance between the grid and cathode increases. This means that the gradient (transconductance) of the valve decreases, so the nice x^2-like graph gets distorted. It took me 6 hours to make the measurement properly. It needed to be done in complete and utter darkness, and I had to read the photometer by hand.
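For reference, one common way to get a single gamma figure out of a set of photometer readings is a straight-line fit in log-log space, assuming the simple model L = L_max * (v / v_max)^gamma. This is only a sketch under that assumption, not necessarily the fit used for the 2.94 above: the function name `fit_gamma`, the 8-bit `max_level`, and the synthetic readings are all made up for illustration, and the model ignores any black-level offset.

```python
import math

def fit_gamma(levels, luminances, max_level=255):
    """Least-squares fit of log(L) = log(L_max) + gamma * log(v / max_level).

    Hypothetical helper: assumes a pure power law with no black-level offset.
    Readings with a zero drive level or zero luminance are skipped, because
    their logarithm is undefined.
    """
    xs, ys = [], []
    for v, lum in zip(levels, luminances):
        if v > 0 and lum > 0:
            xs.append(math.log(v / max_level))
            ys.append(math.log(lum))

    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))

    gamma = sxy / sxx                            # slope of the log-log line
    l_max = math.exp(mean_y - gamma * mean_x)    # luminance at full drive
    return gamma, l_max

# Synthetic readings generated from the model itself (not real measurements).
levels = list(range(16, 256, 16))
synthetic = [80.0 * (v / 255) ** 2.94 for v in levels]
gamma, l_max = fit_gamma(levels, synthetic)
```

On real readings the darkest levels tend to dominate a log-log fit, so it may be worth checking how much the result moves when the lowest few points are left out.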

It was fun, even made a notice on the lab door 🙂

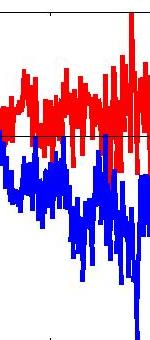

The adaptive boundary setting will have to depend on the luminance level. Tomorrow, I will be able to get more data, and can see the emerging pattern. Need to plot dy/y.
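A minimal sketch of the dy/y calculation, assuming y is the measured luminance and dy is the difference between readings at adjacent drive levels (that interpretation is my assumption here). The name `relative_steps` and the sample readings are hypothetical.

```python
def relative_steps(luminances):
    """Return dy/y for each pair of adjacent readings.

    Assumes the readings are ordered by drive level and that every
    reading used as a denominator is greater than zero.
    """
    steps = []
    for lower, upper in zip(luminances, luminances[1:]):
        steps.append((upper - lower) / lower)
    return steps

# Made-up readings in cd/m^2, for illustration only.
readings = [10.0, 11.0, 12.1]
steps = relative_steps(readings)
```

Plotting `steps` against the lower luminance of each pair should show whether the relative step stays roughly constant or changes with luminance level.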

Well done, Zoltan. I’m sorry it was such a nightmare. That is a bit odd though re the gamma-2.94, especially as we have my note that I calibrated it last year (or at least a monitor of the same make and model, and I don’t think I have another) and got 2.24. Is it possible some of this is due to the PC, i.e. is the NVidia graphics card applying some weird gamma of its own?