Much of my work involves studying our “3D” vision: stereo depth perception. Why should we study this? Part of the answer is summed up in this stirring excerpt from Steven Pinker’s book, How the Mind Works (ch. 4, “The Mind’s Eye”, pp. 241-2):

“I think stereo vision is one of the glories of nature and a paradigm of how other parts of the mind might work. Stereo vision is information processing that we experience as a particular flavor of consciousness, a connection between mental computation and awareness that is so lawful that computer programmers can manipulate it to enchant millions. It is a module in several senses: it works without the rest of the mind (not needing recognizable objects), the rest of the mind works without it (getting by, if it has to, with other depth analyzers), it imposes particular demands on the wiring of the brain, and it depends on principles specific to its problem (the geometry of binocular parallax). Though stereo vision develops in childhood and is sensitive to experience, it is not insightfully described as “learned” or as “a mixture of nature and nurture”; the development is part of an assembly schedule and the sensitivity to experience is a circumscribed intake of information by a structured system. Stereo vision shows off the engineering acumen of natural selection, exploiting subtle theorems in optics rediscovered millions of years later by the likes of Leonardo da Vinci, Kepler, Wheatstone and aerial reconnaissance engineers. It evolved in response to identifiable selection pressures in the ecology of our ancestors. And it solves unsolvable problems by making tacit assumptions about the world that were true when we evolved but are not always true now.”

What I do

In my research, I’m trying to contribute to our understanding of how visual perception, the experience of “seeing something”, arises from the electrical activity of nerve cells in the brain. Because this is obviously a very big question, I’m concentrating on one small aspect of perception: stereoscopic depth. This is the sort of depth which you see in “Magic Eye” images, or which makes the shark appear to loom out of the screen when you put on special glasses to watch “Jaws” in three dimensions. It depends on the fact that your left and right eyes get slightly different views of the world, because they’re in different places on your face. Your brain is able to analyse these small differences to deduce how far away objects are from you. Of course, this isn’t the only way you have of sensing depth; there are many other cues, for example apparent size. But stereopsis is especially useful for fine depth discrimination within about an arm’s length of your body, as you can easily demonstrate by trying to thread a needle with one eye shut. It’s attractive to investigate because, at one level, it’s very simple. If you can identify where an object’s image falls in both eyes, then you can draw a line back from each eye, and the object must be where the two lines intersect. So, it seems a lot easier to investigate than more complicated experiences such as recognising your mother-in-law.
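The "draw a line back from each eye" idea can be written down in a few lines. What follows is only a minimal sketch under simplifying assumptions of my own choosing: a top-down, two-dimensional view, eyes treated as points 6.5 cm apart, and viewing directions measured as angles from straight ahead. The function names `viewing_angles` and `triangulate` are hypothetical and chosen purely for illustration.

```python
import math

def viewing_angles(x, z, eye_separation=0.065):
    """Direction (radians from straight ahead) in which each eye sees a point.

    Assumed geometry: the eyes sit at (-eye_separation/2, 0) and
    (+eye_separation/2, 0); x is the sideways position and z the distance
    ahead, both in metres.
    """
    half = eye_separation / 2.0
    return math.atan2(x + half, z), math.atan2(x - half, z)

def triangulate(angle_left, angle_right, eye_separation=0.065):
    """Intersect the two lines of sight and return the point (x, z)."""
    half = eye_separation / 2.0
    # tan(angle_left) = (x + half) / z and tan(angle_right) = (x - half) / z,
    # so the difference of the tangents equals eye_separation / z.
    diff = math.tan(angle_left) - math.tan(angle_right)
    if diff <= 0:
        raise ValueError("the lines of sight do not meet in front of the eyes")
    z = eye_separation / diff
    x = z * math.tan(angle_left) - half
    return x, z

# Round trip: a point 10 cm to the right and 50 cm ahead.
angles = viewing_angles(0.10, 0.50)
x, z = triangulate(*angles)
```

The geometry is the easy part. The sketch takes for granted that we already know which direction in the left eye and which direction in the right eye belong to the same object.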

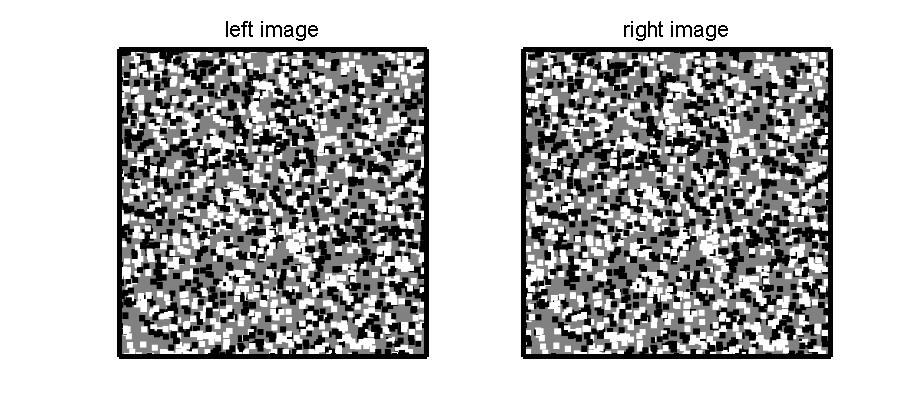

Yet, on closer examination stereopsis throws up a number of problems. Firstly, it depends on knowing the position of an image in both eyes. This is called the stereo correspondence problem, since it’s the problem of working out which point in the left eye corresponds to a given point in the right. This seems fairly straightforward for normal visual scenes. Suppose you are threading a needle and your brain needs to work out how far away the needle is: it just has to identify the image of the needle on the left retina and the image of the needle on the right retina, and work out the difference in their positions. But it turns out that stereopsis doesn’t only work in normal visual scenes. It also works in images like this one:

which are made up of randomly-scattered black and white dots. Here, each black dot in the left eye could potentially “match” any one of hundreds of dots in the right eye. Yet most people can find the right match in a fraction of a second – as we can tell, because they can see depth. This suggests that the stereo correspondence problem is solved very early in visual processing; it doesn’t depend on identifying distinct objects in a scene. This is useful for the researcher, because it means that we probably don’t need to understand object recognition to study stereopsis. Indeed, it may be the other way round: one function of stereopsis may be to help with object recognition. It’s been suggested that this might help a predator break camouflage, for example — suppose a mouse is hiding behind some grass; he may be the same colour as the grass, but a cat’s binocular vision would be able to identify the mouse as a distinct object further away than the grass.
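For readers who would like to make such an image themselves, here is a rough sketch of one common way to build a random-dot stereogram. The details are assumptions made for illustration: the image size, the size of the hidden square, the shift of 4 pixels and the function name `random_dot_stereogram` are all arbitrary choices.

```python
import numpy as np

def random_dot_stereogram(size=100, square=40, shift=4, seed=0):
    """Return (left, right) images of random black (0) and white (1) dots.

    The right image is a copy of the left, except that a central square
    is moved `shift` pixels to the left, giving it a horizontal disparity
    relative to the surround.
    """
    rng = np.random.default_rng(seed)
    left = rng.integers(0, 2, size=(size, size))
    right = left.copy()
    lo = (size - square) // 2
    hi = lo + square
    # Copy the central square into the right image, displaced leftwards.
    right[lo:hi, lo - shift:hi - shift] = left[lo:hi, lo:hi]
    # The strip uncovered by the move is filled with fresh random dots.
    right[lo:hi, hi - shift:hi] = rng.integers(0, 2, size=(square, shift))
    return left, right

left, right = random_dot_stereogram()

# How ambiguous is matching a single dot? Count the dots in the same row
# of the right image that have the same colour as one left-image dot.
row, col = 50, 50
candidates = int(np.sum(right[row] == left[row, col]))
```

Neither image on its own contains any trace of the square; it exists only in the relationship between the two. Even when the search is restricted to a single row, about half the dots in that row have the right colour to be candidate matches for any given dot.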

This random-dot example is also interesting because there’s a huge number of ways of matching up the dots between left and right eyes, all of which imply different depths. Mathematically, there is no unique solution to this problem. Yet, our brain gives us the same depth again and again, and furthermore different people tend to see very much the same depth. This suggests that our brains have developed a set of additional constraints to use in solving the correspondence problem, probably reflecting the visual experience we had as babies and small children. For example, we learnt that the world is generally made up of discrete objects, rather than hazy clouds of dots. So, we are biased towards solutions of the correspondence problem in which the computed depth varies smoothly, with only a few sudden jumps at presumed object boundaries. One goal of my research is to understand more about the particular constraints used by the brain in solving the correspondence problem.
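To give a flavour of how a constraint can remove the ambiguity, here is a toy sketch using window-based matching, a standard technique from computer vision. It is not a claim about the algorithm the brain uses. The assumption built into it is a crude version of smoothness: every dot within a small window is taken to have the same disparity. The one-dimensional row of dots, the parameter values and the function names are all hypothetical.

```python
import numpy as np

def make_row(n=200, start=80, stop=120, shift=4, seed=0):
    """One row of random dots; in the right eye a segment is moved leftwards."""
    rng = np.random.default_rng(seed)
    left = rng.integers(0, 2, size=n)
    right = left.copy()
    right[start - shift:stop - shift] = left[start:stop]
    right[stop - shift:stop] = rng.integers(0, 2, size=shift)
    return left, right

def window_match(left, right, max_shift=6, half_window=10):
    """For each position, pick the shift whose surrounding window agrees best.

    Convention assumed here: disparity d means that the dot at position x
    in the left eye appears at position x - d in the right eye.
    """
    n = len(left)
    margin = half_window + max_shift
    shifts = np.arange(-max_shift, max_shift + 1)
    disparity = np.zeros(n, dtype=int)  # positions near the ends stay at 0
    for x in range(margin, n - margin):
        patch = left[x - half_window:x + half_window + 1]
        scores = [
            np.sum(patch == right[x - d - half_window:x - d + half_window + 1])
            for d in shifts
        ]
        disparity[x] = shifts[int(np.argmax(scores))]
    return disparity

left, right = make_row()
disparity = window_match(left, right)
```

A single dot has many equally good partners, but a window of twenty-one dots almost never finds a perfect partner at the wrong shift. The price is that the method blurs depth near the edges of the segment, where the window straddles two different disparities.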

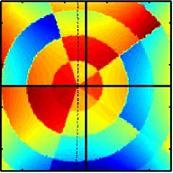

Because our eyes are set apart horizontally in our head, the images they see mainly differ by a horizontal shift — the amount by which any given object is shifted depends on the distance between it and where you are looking. Most of my work concentrates on these horizontal disparities. However, over the past couple of years I’ve also looked at other sorts of differences. For example, under some circumstances there can be differences in the times at which an object appears in the two eyes, and this temporal disparity also leads to a perception of depth. Some of my recent work looks at how temporal disparity is encoded in the brain, and how it affects depth perception. Also, the two images of an object may differ in their vertical, as well as horizontal, position. These vertical disparities are important because in theory, the brain could work out where the eyes are looking just from vertical and horizontal disparity. In practice, the brain can also “feel” where the eyes are looking, from the tension in the muscles which move the eyes, and it uses both sources of information. I’ve recently been considering, from a theoretical point of view, how the brain might efficiently encode vertical disparity.
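The dependence of horizontal disparity on distance can be made concrete with a little geometry. The sketch below is deliberately restricted to the simplest case, and its assumptions are mine: both the object and the point you are looking at lie straight ahead on the midline, the eyes are 6.5 cm apart, and disparity is counted as positive for objects nearer than fixation. The function names are hypothetical.

```python
import math

def vergence_angle(distance, eye_separation=0.065):
    """Angle (radians) between the two lines of sight to a midline point."""
    return 2.0 * math.atan(eye_separation / (2.0 * distance))

def horizontal_disparity(object_distance, fixation_distance, eye_separation=0.065):
    """Disparity of a midline object relative to the fixation point, in radians.

    Positive values mean the object is nearer than fixation; this sign
    convention is an assumption of the sketch.
    """
    return (vergence_angle(object_distance, eye_separation)
            - vergence_angle(fixation_distance, eye_separation))

def approximate_disparity(object_distance, fixation_distance, eye_separation=0.065):
    """Small-angle approximation: eye_separation * (1/object - 1/fixation)."""
    return eye_separation * (1.0 / object_distance - 1.0 / fixation_distance)

# An object at 50 cm while fixating at 1 m.
exact = horizontal_disparity(0.5, 1.0)
approx = approximate_disparity(0.5, 1.0)
```

The approximation shows why stereopsis is most useful close up: for a fixed gap in depth, disparity falls off roughly with the square of the viewing distance.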

Although my work is purely theoretical, it depends heavily on what we know about how the brain represents the differences between left and right images. Over the past 30 years, a great deal has been learned about the early stages of this encoding, from electrophysiological experiments on cats and monkeys.


Electrical activity from single cells in the animal’s brain can be recorded while it views stereoscopic images on a computer, and the experimenter can measure how the cell’s activity changes in response to different simulated scenes. Much of my work in recent years has been trying to develop mathematical models of how these cells’ activity depends on the two retinal images. To this end, I spent four years working with Bruce Cumming, an electrophysiologist at the National Institutes of Health in Maryland, analysing his recordings of neuronal activity and trying to understand the nature of the encoding. A second strand of my work looks at how higher brain areas might use the output of such cells in order to solve the correspondence problem and perceive depth. The ultimate goal is a computer program representing the brain’s stereoscopic system, capable of calculating the stereo depth perceived in any images, reproducing the brain’s performance by using the same algorithms as the brain does itself. Essentially, this would be a machine replica of a small part of the brain.
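As an illustration of what a mathematical model of such a cell can look like, here is a stripped-down, one-dimensional sketch of the binocular energy model (Ohzawa, DeAngelis and Freeman, 1990), a widely used textbook description of disparity-tuned cells. It is included only as an example of the genre; nothing here should be read as the specific model developed in the work described above. The Gabor receptive fields, the parameter values and the function names are assumptions.

```python
import numpy as np

def gabor(x, sigma=6.0, freq=1.0 / 16.0, phase=0.0):
    """Gabor profile: a Gaussian envelope multiplied by a cosine carrier."""
    return np.exp(-x**2 / (2.0 * sigma**2)) * np.cos(2.0 * np.pi * freq * x + phase)

def energy_response(left, right, preferred_disparity=0):
    """Response of a model complex cell to a pair of 1D images.

    Two binocular subunits with receptive-field phases a quarter cycle
    apart each sum their left-eye and right-eye inputs; the cell's output
    is the sum of the squared subunit responses.
    """
    x = np.arange(len(left)) - len(left) // 2
    total = 0.0
    for phase in (0.0, np.pi / 2.0):
        rf_left = gabor(x, phase=phase)
        # The right-eye receptive field is displaced by the preferred disparity.
        rf_right = gabor(x + preferred_disparity, phase=phase)
        simple = left @ rf_left + right @ rf_right
        total += simple**2
    return total

def mean_response(stimulus_disparity, preferred_disparity, trials=200, n=128, seed=0):
    """Average response to random-dot rows with a given stimulus disparity."""
    rng = np.random.default_rng(seed)
    responses = []
    for _ in range(trials):
        left = rng.choice([-1.0, 1.0], size=n)
        # Disparity d: the dot at x in the left eye appears at x - d in the right.
        right = np.roll(left, -stimulus_disparity)
        responses.append(energy_response(left, right, preferred_disparity))
    return float(np.mean(responses))

at_preferred = mean_response(3, preferred_disparity=3)
away_from_preferred = mean_response(11, preferred_disparity=3)
```

Averaged over many random patterns, the model cell responds more strongly when the stimulus disparity matches its preferred disparity than when the two differ by half a cycle of the receptive field's carrier.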

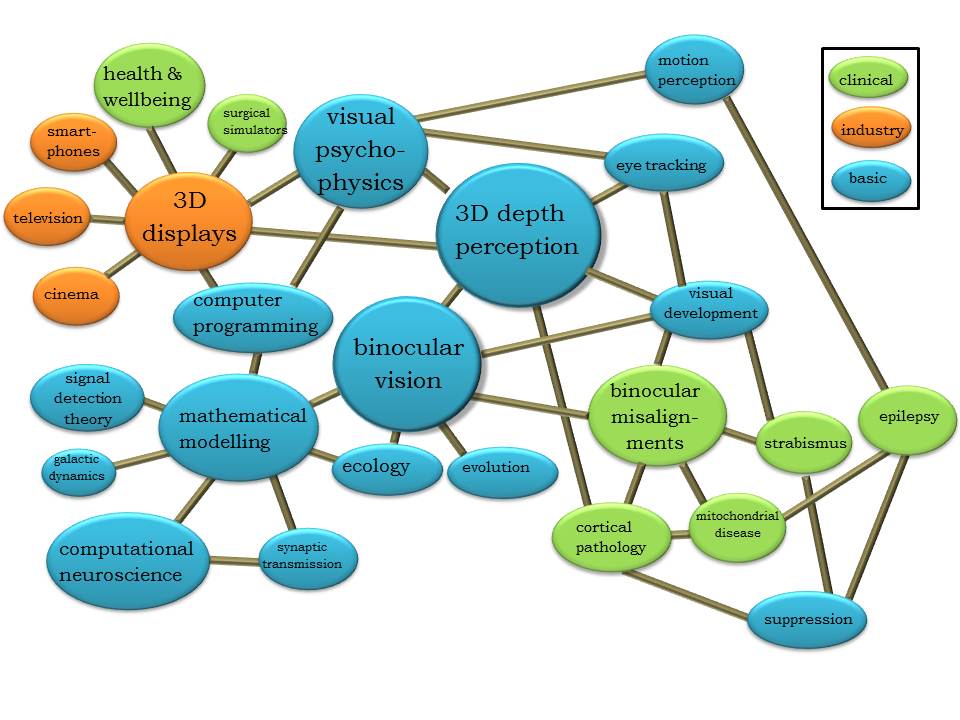

In recent years, my work has increasingly broadened out from the basic understanding of stereo vision into a wide range of related areas. I’ve tried to sketch some of these, and their inter-relations, in the following graphic.
